Is It Your Solar Panel or Your Neighbour's?

Why 2D needs 3D: correcting building perspective in orthophotos

Detecting solar panels on roofs sounds straightforward: just as for swimming pools, crop high-resolution aerial imagery around each roof and apply supervised image recognition techniques. However, there is a big issue with that plan: the image of a roof can be misaligned with the cadastral building footprint, and sometimes lies completely outside it. Even the best image recognition algorithm will then make mistakes by assigning solar panels to the wrong building.


What is going on? Is the polygon wrong, or is the picture poorly georeferenced? It turns out both are correct: we have simply run into a perspective issue inherent to orthophotos.

What is an orthophoto?

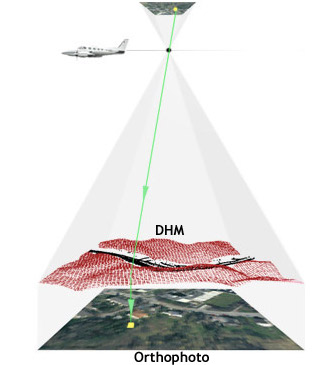

Producing high-resolution aerial imagery over large areas is challenging: it involves flying a plane at low altitude, taking many pictures with a high-resolution sensor, and recording the exact position of the plane when each picture is taken.

The process of combining these pictures into one coherent, global, georeferenced image is known as orthorectification. It takes into account the georeferencing of each picture, the optical distortion of the sensor, and the 3D geometry of the ground, which may not be flat.

source: geoplana.de
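To make the process more concrete, here is a minimal sketch of the core orthorectification step: for each cell of the output grid, take its ground coordinates and elevation, project that 3D point into the raw photo with a camera model, and copy the pixel found there. It assumes a single, perfectly vertical pinhole camera with no lens distortion and nearest-neighbour sampling; `orthorectify` and its parameters are hypothetical names, and real pipelines blend many overlapping photos and model the sensor in far more detail.

```python
import numpy as np


def orthorectify(image, elevation, origin, cell_size, camera, focal_px):
    """Nearest-neighbour orthorectification from a single vertical photo.

    image:     (rows, cols) raw photo.
    elevation: (R, C) ground height of each output cell, e.g. taken from a DTM.
    origin:    world (x, y) of the top-left corner of the output grid.
    cell_size: output ground resolution in metres.
    camera:    world (x, y, z) of the camera's perspective centre.
    focal_px:  focal length expressed in pixels.
    Assumes a perfectly vertical camera above all terrain, no lens
    distortion and the principal point at the centre of the photo.
    """
    elevation = np.asarray(elevation, dtype=float)
    n_rows, n_cols = elevation.shape
    cols, rows = np.meshgrid(np.arange(n_cols), np.arange(n_rows))
    # World coordinates of each output cell centre (y decreases downwards).
    x = origin[0] + (cols + 0.5) * cell_size
    y = origin[1] - (rows + 0.5) * cell_size
    cam_x, cam_y, cam_z = camera
    depth = cam_z - elevation  # vertical distance from camera to ground point
    # Pinhole projection of (x, y, elevation) into photo pixel coordinates.
    u = image.shape[1] / 2 + focal_px * (x - cam_x) / depth
    v = image.shape[0] / 2 - focal_px * (y - cam_y) / depth
    ui = np.floor(u).astype(int)
    vi = np.floor(v).astype(int)
    inside = (ui >= 0) & (ui < image.shape[1]) & (vi >= 0) & (vi < image.shape[0])
    ortho = np.zeros(elevation.shape, dtype=image.dtype)
    ortho[inside] = image[vi[inside], ui[inside]]  # cells outside the photo stay 0
    return ortho


# Flat terrain, camera 1000 m up, 100 px focal length: one pixel covers 10 m.
photo = np.arange(100 * 100).reshape(100, 100)
ortho = orthorectify(photo, np.zeros((100, 100)), origin=(-500.0, 500.0),
                     cell_size=10.0, camera=(0.0, 0.0, 1000.0), focal_px=100.0)
```

With flat terrain and a grid matching the photo's pixel footprint, the output simply reproduces the photo. The `elevation` argument is where the choice of height model enters: every cell is projected using the height it is given, so any object missing from that model is projected from the wrong height.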

If this process is well understood, why do building footprints look misaligned when plotted on top of aerial imagery?

The origin of the problem

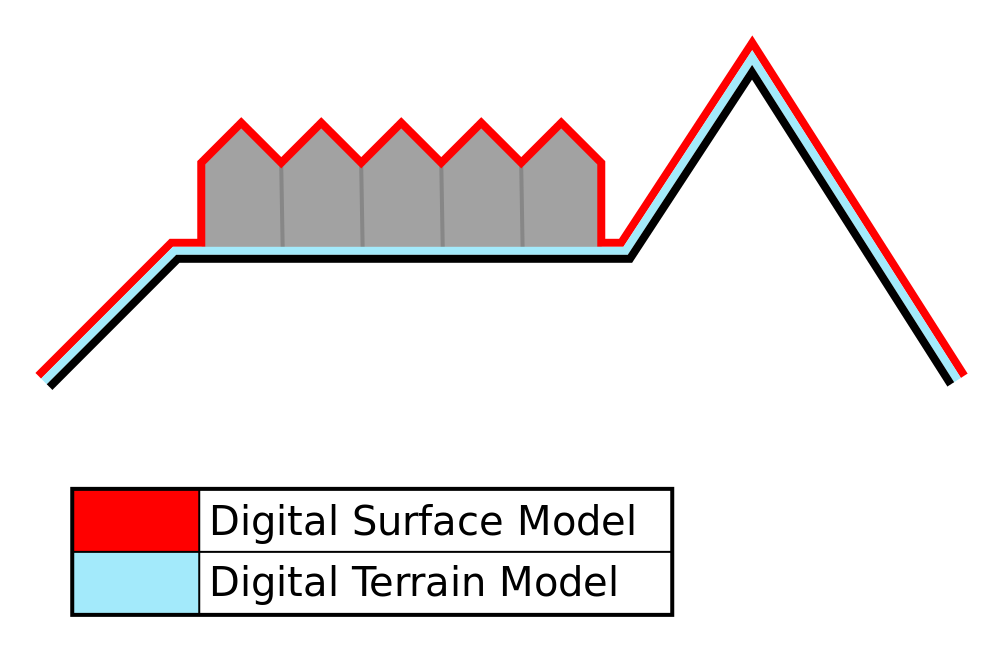

It turns out that imagery providers often orthorectify their photos using a Digital Terrain Model (DTM), a 3D model of the ground altitude. This is enough for many applications, but it ignores buildings and any other object above the ground. As a result, the base of each building is correctly aligned, but roofs are offset by an amount that depends on their height.
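The size of this offset follows from similar triangles. Assume, for illustration, flat ground, a camera at height $H$ above it, and a roof point at height $h$ whose horizontal distance from the nadir (the ground point directly below the camera) is $D$. Orthorectifying with a terrain-only model extends the camera ray through that roof point down to the ground, where it lands at distance $D'$ from the nadir:

$$
\frac{D'}{H} = \frac{D}{H - h}
\quad\Longrightarrow\quad
D' = D\,\frac{H}{H - h},
\qquad
\Delta = D' - D = D\,\frac{h}{H - h}.
$$

The offset $\Delta$ points away from the nadir, vanishes at ground level ($h = 0$) and directly below the camera ($D = 0$), and grows with both height and distance. With illustrative values $H = 600$ m, $h = 10$ m and $D = 300$ m, $\Delta = 3000/590 \approx 5.1$ m, a shift large enough to move a narrow roof partly or entirely onto the neighbouring building.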

One possible solution is to use a Digital Surface Model (DSM) instead, that is, a 3D surface that includes every object present in the photo, such as buildings and trees. However, this would require re-processing the raw data to produce corrected orthophotos.

source: wikipedia.org

Towards a pragmatic solution

While high-resolution orthophotos are abundant, the raw data used to produce them currently isn’t. We therefore took another route to match aerial imagery of roofs with the correct buildings: using 3D models of the roof planes (which we build from Lidar data, see this dedicated blog post) and inferring the location of the plane at the time each picture was taken, we can successfully align roofs with the aerial imagery.
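The geometric core of this idea can be sketched in a few lines: given the 3D vertices of a roof plane and an assumed camera position, extend the ray from the camera through each vertex down to the ground, which is where that vertex ends up in a terrain-corrected orthophoto. This is a minimal sketch assuming flat ground, a single ideal pinhole camera and heights measured above the ground; `project_roof_to_orthophoto` and its parameters are hypothetical names, not our production code.

```python
from typing import List, Sequence, Tuple

Point2 = Tuple[float, float]
Point3 = Tuple[float, float, float]


def project_roof_to_orthophoto(
    roof: Sequence[Point3], nadir: Point2, altitude: float
) -> List[Point2]:
    """Return where each roof vertex appears in a DTM-based orthophoto.

    roof:     vertices (x, y, h), with h the height above the ground in metres
              (hypothetical input, e.g. from a Lidar roof model).
    nadir:    (x, y) of the ground point directly below the camera.
    altitude: camera height above the ground in metres.
    Assumes flat ground and a single ideal pinhole camera.
    """
    nx, ny = nadir
    projected: List[Point2] = []
    for x, y, h in roof:
        if h >= altitude:
            raise ValueError("every roof vertex must lie below the camera")
        # Extend the ray camera -> vertex down to the ground: the horizontal
        # offset from the nadir is scaled by H / (H - h).
        scale = altitude / (altitude - h)
        projected.append((nx + (x - nx) * scale, ny + (y - ny) * scale))
    return projected


# A roof corner 10 m high, 300 m east of the nadir, camera 600 m above ground.
corner, base = project_roof_to_orthophoto(
    [(300.0, 0.0, 10.0), (300.0, 0.0, 0.0)], nadir=(0.0, 0.0), altitude=600.0
)
# corner[0] is about 305.08; base stays at (300.0, 0.0)
```

Cropping the imagery around the projected roof polygon, rather than around the cadastral footprint, would then capture the roof where it actually appears in the orthophoto.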

For now, we have determined the plane location manually. Based on our observations, the plane that took the photograph above seems to have been flying at around 600 m above the ground. We have confirmed that the approach is sound: knowing the plane's position is enough to solve the problem. The next step is to determine it automatically using imaging techniques, which we will cover in another blog post.
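In principle, a handful of manual correspondences is enough to pin down the plane position. One possible way to do this, shown here as a hypothetical sketch rather than the procedure we used: for a few roof corners whose 3D position is known from the Lidar model, note where they actually appear in the orthophoto. Under the same flat-ground, single-camera assumptions, the model `q = n + (p - n) * H / (H - h)` rearranges into equations that are linear in the nadir `n` and the altitude `H`, so ordinary least squares (`numpy.linalg.lstsq`) applies. Since an orthophoto is a mosaic of many photos, all correspondences should come from an area covered by the same source image.

```python
import numpy as np


def estimate_camera(roof_xyh, observed_xy):
    """Least-squares estimate of the camera nadir (x, y) and altitude H.

    roof_xyh:    (N, 3) true roof points (x, y, h), h = height above ground.
    observed_xy: (N, 2) positions where those points appear in the orthophoto.
    Model (flat ground, one pinhole camera): q = n + (p - n) * H / (H - h),
    which rearranges to H * (q - p) + n * h = q * h, linear in (H, n_x, n_y).
    """
    p = np.asarray(roof_xyh, dtype=float)
    q = np.asarray(observed_xy, dtype=float)
    h = p[:, 2]
    zeros = np.zeros_like(h)
    # One equation per point and axis; the unknown vector is [H, n_x, n_y].
    rows_x = np.column_stack([q[:, 0] - p[:, 0], h, zeros])
    rows_y = np.column_stack([q[:, 1] - p[:, 1], zeros, h])
    a = np.vstack([rows_x, rows_y])
    b = np.concatenate([q[:, 0] * h, q[:, 1] * h])
    solution, *_ = np.linalg.lstsq(a, b, rcond=None)
    altitude, nadir_x, nadir_y = solution
    return float(nadir_x), float(nadir_y), float(altitude)


# Synthetic check: generate observations from a known camera, then recover it.
true_nadir, true_altitude = np.array([120.0, -40.0]), 600.0
roof = np.array([[300.0, 0.0, 10.0], [-50.0, 200.0, 15.0],
                 [80.0, 80.0, 6.0], [400.0, -300.0, 12.0]])
scale = true_altitude / (true_altitude - roof[:, 2])
observed = true_nadir + (roof[:, :2] - true_nadir) * scale[:, None]
print(estimate_camera(roof, observed))  # approximately (120.0, -40.0, 600.0)
```

With noise-free synthetic data the camera is recovered exactly. With hand-picked points, more correspondences spread across the image, and taller roofs, should give a more stable estimate, since points at ground level (h = 0) carry no information about the camera.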

Conclusion

Overcoming perspective issues is a necessary step not only for solar panel detection, but for any detection task on roof imagery, such as determining the roof type or detecting chimneys and Velux roof windows. To solve this problem, we either need to re-process the raw images using a digital surface model or, in the absence of raw data, automatically infer the plane position from the existing orthophotos. Going from manual positioning (as above) to automatic positioning requires substantial work, which remains to be done. Ultimately, we wish to encourage the ongoing open data efforts and to extend them to raw data, widening the range of (re-)use cases.